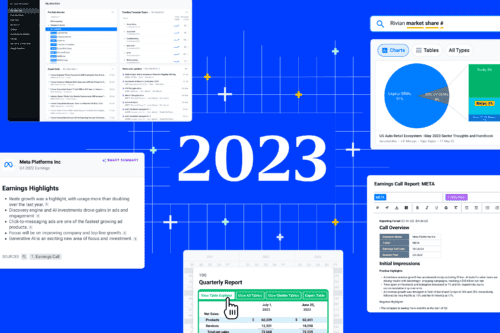

Product15+ min read

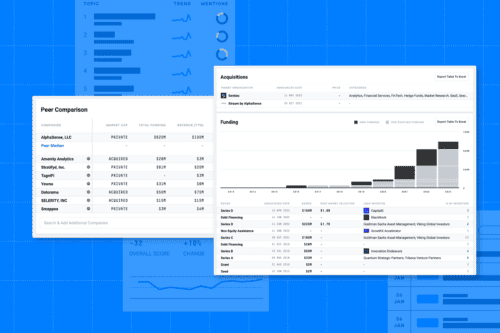

How to Conduct Tech Market Research

The technology industry is evolving at a rapid clip as new innovations, trends, and market players emerge every day. For...