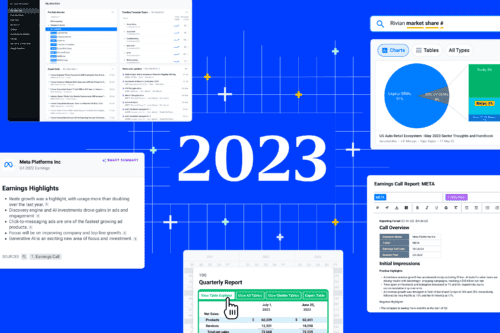


Technology Industry Trends and Outlook for 2024

Over the past few years, the technology industry has experienced dramatic highs and lows. 2020 and 2021 were marked by...